Microsoft acquired the game developer Activision Blizzard for $68.7 billion and cited the metaverse as a reason for acquiring it. Facebook’s founder, Mark Zuckerberg, has also bet on the metaverse and renamed his social networking company Meta. Google has worked on metaverse-related technology for years. And Apple has its own related devices in the works.

These moves show that many tech giants see a future in the metaverse and have started building a strong position in the domain while it is still at a nascent stage.

However, the metaverse is not a completely new concept; it is often described as the world’s Digital Twin, the AR Cloud, the Mirror World, the Magicverse, the Spatial Internet, or Live Maps. The 2003 virtual world platform Second Life is often described as the first metaverse. Second Life is an online multimedia platform that allows people to create an avatar for themselves and have a second life in an online virtual world.

Delivering shareable and persistent metaverse-based content requires a synergy of numerous technologies.

Advancements in these technologies are currently under way to implement the metaverse on a larger scale. However, some limitations still need to be addressed before the metaverse can connect with users on a more personal level, specifically the problem of mapping the real world into the virtual world using sensors. Beyond this mapping problem, other areas also need work to enable the metaverse. You can refer to the image below for the same.

In this article, we focus on one of the biggest problems in implementing the metaverse, Simultaneous Localization and Mapping (SLAM), on the companies working to solve it, and on some companies addressing issues other than SLAM.

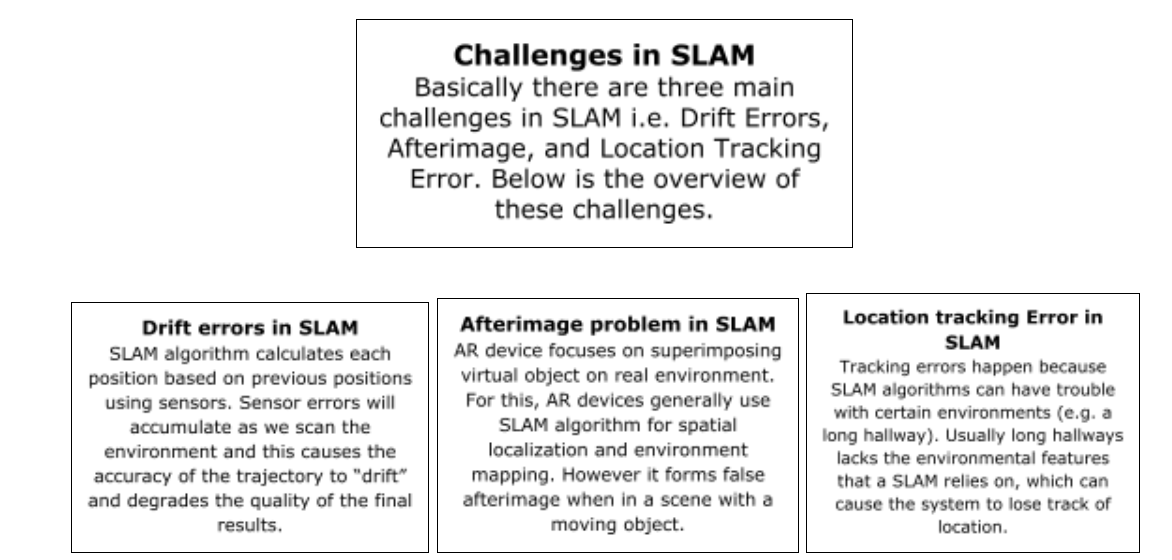

What are some of the challenges of SLAM in the space of Metaverse?

SLAM technology has progressed at an astounding pace over the last 30 years, enabling large-scale real-world applications. For example, Tesla’s Autopilot uses SLAM: sensors translate data from the outside world into the car’s onboard computer. This information is then compiled into a virtual projection of the surroundings, helping to avoid crashes and keeping the driver informed about the journey.

This ability to instantly render the environment as a high-quality virtual replica will be the core challenge for businesses that want to focus on VR and AR. Let us look at some of the SLAM challenges that the industry needs to address so that the metaverse can reach a personal level:

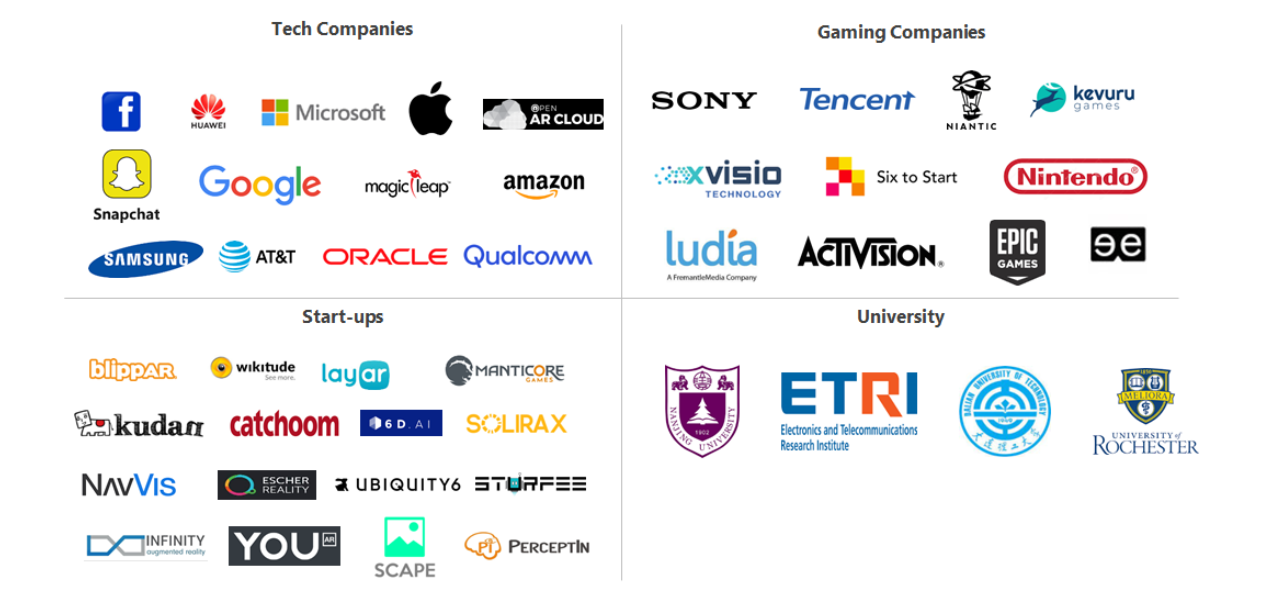

Various Companies and their solutions to the SLAM Technology Challenges

SLAM is expected to mature considerably in the near future, given the solutions that companies are offering to the above challenges. Below is the list of entities researching solutions to SLAM technology challenges:

Though it is not viable to discuss all the entities in one article, let us look at some major companies and their solutions to the above challenges.

SONY – Providing solution to drift errors in SLAM using IMU Sensors

- SLAM is one of the core technologies required for the metaverse, and drift error is one of the important challenges that need to be overcome. Sony has been researching this problem and has come up with multiple solutions:

In 2019, Sony proposed using thermal beacons and IMU sensors to rectify drift errors in SLAM and so increase the accuracy of location tracking in a video game. The user device detects multiple thermal signals emitted by thermal beacons placed on walls. Based on these signals and data from the IMU, the location of the user device is determined. Sony was also granted a patent for this solution (US10786729B1).

- Drift error in SLAM also makes it difficult to build 3D maps from 2D images captured by the camera. Sony has come up with a solution to this as well.

3D image generation may include estimating the position and orientation of the camera at the moment the 2D image was captured. The joint relationship between the 3D map and the position and orientation of the camera may form a transformation from the 3D map to the 2D image, so a 3D point of the 3D map can be reprojected into the image frame. Doing so may result in a reprojection error. This error may be expressed in pixels and corresponds to the distance between the reprojected 3D point and the corresponding point position in the image.

Sony has developed a method that determines the respective reprojection errors of the reprojection points and then performs adjustments to update the 3D map. Sony has sought patent protection for this solution as well (US20200334842A1).
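To make the notion of a reprojection error concrete, here is a minimal sketch that projects one 3D map point into an image and measures the pixel distance to where the point was actually observed. It assumes a simple pinhole camera with a single focal length and no lens distortion; the function names and the numbers are illustrative assumptions, not taken from Sony's patent.

```python
import math

def project(point_3d, rotation, translation, focal, center):
    """Project a 3D map point to pixel coordinates (pinhole model, assumed)."""
    # Move the point from map coordinates into the camera frame: Xc = R * X + t
    xc = [sum(rotation[r][c] * point_3d[c] for c in range(3)) + translation[r]
          for r in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division, then shift by the principal point
    u = focal * xc[0] / xc[2] + center[0]
    v = focal * xc[1] / xc[2] + center[1]
    return (u, v)

def reprojection_error(point_3d, observed_px, rotation, translation, focal, center):
    """Pixel distance between the reprojected point and its observed position."""
    u, v = project(point_3d, rotation, translation, focal, center)
    return math.hypot(u - observed_px[0], v - observed_px[1])

# Hypothetical values: camera at the map origin, looking down the z axis.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
error_px = reprojection_error(
    point_3d=(0.1, 0.0, 2.0),        # map point 2 m ahead, 0.1 m to the right
    observed_px=(347.0, 240.0),      # where the feature was detected in the image
    rotation=IDENTITY,
    translation=(0.0, 0.0, 0.0),
    focal=500.0,
    center=(320.0, 240.0),
)
print(error_px)
```

A map update step would then adjust the 3D points or the camera pose so that the sum of such errors over all observations becomes smaller.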

NAVVIS – Using Precision SLAM Technology to solve drift and other errors

NavVis, a start-up that is one of the leading global providers of indoor spatial intelligence solutions, has come up with several approaches to drift and other errors in SLAM. Below is a list of technologies that aim to solve these errors.

- Loop Closure Algorithm – Loop closure is the act of correctly asserting that a device has returned to a previously visited location. The method maintains a list of prior orientations and compares the user’s present view with all or a subset of the previously explored views. Based on the comparison, the spatial map of the environment is updated and the drift error is reduced. NavVis states that this algorithm achieves an accuracy of 20 mm at 95% confidence (Source), which is considerably better than the 0.6-1.3% error reported for stereoscopic cameras, where the estimated location could be 60-130 cm away from the real location of the object. (Source)

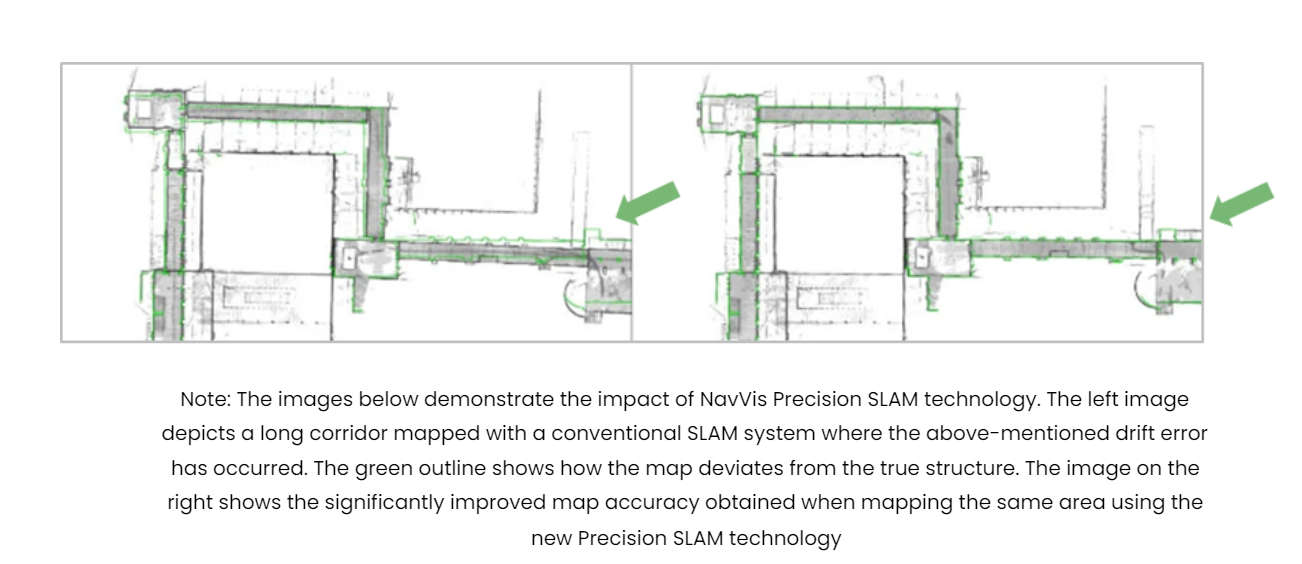

- Precision SLAM Technology – NavVis is also working on another algorithm, Precision SLAM Technology, which significantly reduces drift error and improves SLAM accuracy. NavVis points out that the benefit of Precision SLAM Technology is especially evident when the loop closure technique has little effect or cannot be used. The company is also seeking a patent for this technology (EP3550513A1).

- NavVis has also filed a patent (EP2913796A1) for removing parallax error from the 3D model of a building. The system creates a point cloud from the signals of a laser scanner in conjunction with images from multiple cameras. A 3D model of the building that is free from parallax error is then created from the point cloud.

- NavVis is also researching a technology that generates a 3D model of a captured object space using multiple scanners mounted on a mobile apparatus. Given the advantages of this solution for precisely mapping indoor environments such as buildings, NavVis has applied for a patent on it as well (US20210132195A1).
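The loop closure idea described above can be sketched in a few lines. This is a simplified illustration, not NavVis's algorithm: each view is reduced to a hypothetical descriptor vector, views are compared by cosine similarity, and the accumulated drift is spread linearly over the poses recorded since the matched view. Production systems typically replace that last step with pose graph optimization.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def detect_loop_closure(past_views, current_view, min_gap=3, threshold=0.95):
    """Return the index of the best-matching earlier view, or None."""
    # Ignore the most recent views: they match only because they are adjacent.
    candidates = past_views[:max(0, len(past_views) - min_gap)]
    best_index, best_score = None, threshold
    for index, view in enumerate(candidates):
        score = cosine_similarity(view, current_view)
        if score >= best_score:
            best_index, best_score = index, score
    return best_index

def correct_drift(trajectory, loop_start, drift):
    """Spread the drift linearly over the poses recorded after loop_start."""
    steps = len(trajectory) - 1 - loop_start
    if steps <= 0:
        return list(trajectory)
    corrected = list(trajectory[:loop_start + 1])
    for k in range(1, steps + 1):
        x, y = trajectory[loop_start + k]
        fraction = k / steps
        corrected.append((x - fraction * drift[0], y - fraction * drift[1]))
    return corrected

# Hypothetical data: a walk around a square that should end where it began.
past_views = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 1]]   # descriptors of poses 0..3
trajectory = [(0.0, 0.0), (1.0, 0.05), (1.05, 1.1), (0.1, 1.15), (0.2, 0.2)]
current_view = [0.99, 0.05, 0.0]                             # descriptor of pose 4

match = detect_loop_closure(past_views, current_view)
corrected = trajectory
if match is not None:
    # The current pose should coincide with the matched pose; the gap is drift.
    drift = (trajectory[-1][0] - trajectory[match][0],
             trajectory[-1][1] - trajectory[match][1])
    corrected = correct_drift(trajectory, match, drift)
print(match, corrected[-1])
```

In this toy run the last estimated pose is pulled back onto the starting point, and the intermediate poses are shifted proportionally.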

Huawei – Using a Global sub-map to solve the drift error in SLAM

- To reduce the drift error in SLAM, Huawei came up with a solution in which the SLAM system calculates the posture and position of the virtual object using the video image collected by the camera and the global sub-map (the part of the global map that corresponds to the location of the electronic device). Using the maps together with the collected video images to estimate the pose of the electronic device can effectively reduce or even eliminate pose drift.

- Huawei is also seeking a patent for this solution (WO2021088498A1). In addition, it is seeking patent protection in several jurisdictions, such as Japan, Germany, and Denmark.

NANJING UNIVERSITY – Making use of the AGAST-FREAK algorithm to solve the drift error in SLAM

- To solve the drift error in SLAM, the university is researching a solution in which a mobile camera captures the real scene image and the AGAST-FREAK algorithm extracts feature points from the captured image to complete scene map initialization. Then, the camera pose is estimated and a local scene map is constructed using the extracted feature points and IMU data. Next, a global map is built, and keyframes are inserted into it to expand and optimize it. Finally, real-time positioning of the camera and precise registration of the virtual object are performed according to the feature points in the captured real scene image. Nanjing University has also applied for a patent on this solution (CN110111389A).
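FREAK describes each feature point with a binary string, so feature points from two images are usually matched by Hamming distance. The sketch below illustrates only that matching step, under simplifying assumptions: the descriptors are toy 8-bit integers rather than real FREAK output, and the function names and the distance threshold are hypothetical, not taken from the patent.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def match_descriptors(query, reference, max_distance=1):
    """Match each query descriptor to its nearest reference descriptor.

    Returns (query_index, reference_index, distance) tuples and drops
    matches whose distance exceeds max_distance.
    """
    if not reference:
        return []
    matches = []
    for qi, q in enumerate(query):
        distance, ri = min((hamming(q, r), ri) for ri, r in enumerate(reference))
        if distance <= max_distance:
            matches.append((qi, ri, distance))
    return matches

# Toy 8-bit descriptors; real FREAK descriptors are much longer.
reference = [0b10110010, 0b01001101, 0b11110000]   # features already in the map
query = [0b10110011, 0b00001111, 0b01001101]       # features in the new frame

matches = match_descriptors(query, reference)
print(matches)
```

Matched pairs like these are what a SLAM pipeline feeds into pose estimation; the second query descriptor is rejected here because its nearest neighbor is too far away.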

PERCEPTIN – Using the inertial data to solve the drift error in SLAM

- Perceptin developed a system that includes a mobile platform, a camera, and an IMU. The system receives the location of the mobile platform, including the view direction of the camera (the initial pose). It updates the initial pose using inertial data from the multi-axis IMU to generate a propagated pose before receiving a second camera frame. It then corrects the drift between the propagated pose, which is based on the inertial data, and the actual perspective of the new pose. To reduce computation requirements, this is done by estimating the overlap between successive camera frames and correlating the new frame with the previous frame through a 2D comparison. Citing the improved speed and accuracy, Perceptin has patented this solution in many jurisdictions, such as China, Europe, and the US (US10043076B1).
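The propagate-then-correct cycle described above can be illustrated with a small 2D sketch. It is a simplification under stated assumptions: the pose is planar, the IMU supplies only a forward acceleration and a yaw rate, and the correction is a plain weighted blend toward a camera-derived pose (a complementary-filter style update) rather than the frame-overlap correlation in the patent. All names and numbers are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float        # metres
    y: float        # metres
    heading: float  # radians
    speed: float    # metres per second along the heading

def propagate(pose, accel, yaw_rate, dt):
    """Advance the pose by one IMU sample (dead reckoning)."""
    heading = pose.heading + yaw_rate * dt
    speed = pose.speed + accel * dt
    return Pose(pose.x + speed * math.cos(heading) * dt,
                pose.y + speed * math.sin(heading) * dt,
                heading, speed)

def correct(propagated, visual_xy, visual_heading, gain=0.8):
    """Pull the propagated pose toward the pose implied by the new camera frame."""
    # Wrap the heading difference into [-pi, pi) before blending.
    dh = (visual_heading - propagated.heading + math.pi) % (2 * math.pi) - math.pi
    return Pose(propagated.x + gain * (visual_xy[0] - propagated.x),
                propagated.y + gain * (visual_xy[1] - propagated.y),
                propagated.heading + gain * dh,
                propagated.speed)

# Hypothetical run: the platform drives straight along x at 1 m/s, but the
# gyroscope has a small bias (0.02 rad/s), so the propagated pose drifts.
pose = Pose(0.0, 0.0, 0.0, 1.0)
for _ in range(10):                      # ten IMU samples between two frames
    pose = propagate(pose, accel=0.0, yaw_rate=0.02, dt=0.1)
propagated = pose

# The second camera frame indicates the platform is at (1.0, 0.0), heading 0.
corrected = correct(propagated, visual_xy=(1.0, 0.0), visual_heading=0.0)
print(propagated)
print(corrected)
```

The gain controls how much the camera is trusted over the IMU; a value of 1.0 would simply replace the propagated pose with the visual one.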

Companies addressing issues other than SLAM errors to implement Metaverse

Niantic

Niantic first launched its AR Cloud back in 2018. The platform, previously known as the “Niantic Real World Platform,” has since been renamed Lightship. It creates a mesh via a smartphone camera. However, the platform has one weakness: persistent content. To address this, Niantic acquired Escher Reality, which helps Niantic accelerate its work on persistent and shared AR.

The platform is also designed only for phones, not for headsets. However, Niantic’s recent collaboration with Microsoft suggests that the company may be working toward bringing the platform to headsets as well. (Source)

Recently, Niantic filed a patent (US20210218950A1) describing a method of training a depth estimation model using depth hints. Depth estimation/sensing is used in both navigation and scene mapping.

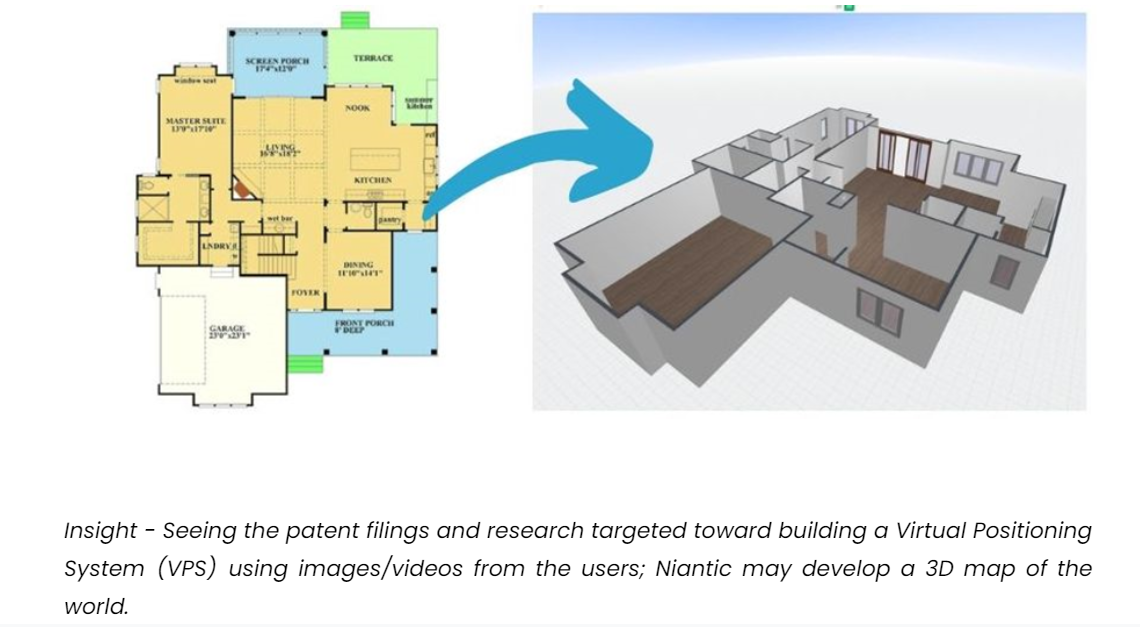

Niantic has also filed patents on point clouds, such as US20210187391A1, which address the problems of inaccurate mapping of the real world and of determining the location of players’ mobile devices. The approach combines map data from multiple user devices to generate a 3D map of the environment based on the feature points that the different image data have in common.

Lightship scans the real world and maps it for AR too, without needing LIDAR. Niantic also acquired 6D.ai, which can map 3D landscapes and crowdsource world maps with regular phone cameras, and it’s being used in Lightship’s software toolkit.

In 2020, Niantic also acquired Matrix Mill, which helps improve its scene mapping capabilities. Building on this, Niantic researchers recently revealed a technology that can transform any smartphone into a 3D mapping tool.

Technology to Transform Any Smartphone Into a Powerful 3D Mapping Tool

The new depth technology revealed by Niantic researchers infers the 3D shape hidden in a 2D image, determining the depth of objects from a single 2D image. In addition, Niantic receives videos and images of real-world locations from users and is working on a Virtual Positioning System (VPS) to build a 3D map. Niantic has also filed a patent, US20210082202A1, along similar lines of 3D mapping using 2D images.

Insight – Given the patent filings and research targeted toward building a Virtual Positioning System (VPS) from users’ images and videos, Niantic may develop a 3D map of the world.
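Once a depth value is available for each pixel, turning a 2D image into 3D points is a matter of geometry. The sketch below shows that back-projection step only; the depth map is assumed to be given (in Niantic's case it would come from a learned depth estimation model, which is not reproduced here), and the pinhole camera parameters and function names are illustrative assumptions.

```python
def back_project(u, v, depth, focal, center):
    """Convert pixel (u, v) with a known depth into a 3D point in the camera frame."""
    x = (u - center[0]) * depth / focal
    y = (v - center[1]) * depth / focal
    return (x, y, depth)

def depth_map_to_points(depth_map, focal, center):
    """Back-project every pixel that has a valid (positive) depth."""
    points = []
    for v, row in enumerate(depth_map):
        for u, depth in enumerate(row):
            if depth > 0:
                points.append(back_project(u, v, depth, focal, center))
    return points

# Hypothetical 2x3 depth map in metres; 0.0 marks a pixel with no estimate.
depth_map = [
    [2.0, 2.0, 0.0],
    [2.0, 1.5, 2.0],
]
points = depth_map_to_points(depth_map, focal=2.0, center=(1.0, 0.5))
print(len(points), points[0])

# A single pixel from a larger, 640x480-style image (assumed intrinsics).
sample = back_project(420, 240, 2.0, focal=500.0, center=(320.0, 240.0))
print(sample)
```

Point sets produced this way from many images, once placed in a common coordinate frame, are the raw material for a shared 3D map.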

Multiplayer game Codename: Urban Legends on Verizon’s 5G Mobile Edge Computing Platform

In collaboration with Verizon, Niantic launched a demo of the large-scale multiplayer game Codename: Urban Legends on Verizon’s 5G Mobile Edge Computing Platform. Niantic is using Verizon’s multi-access edge computing (MEC) servers, which will be able to manage AR content on a massive scale. Niantic has also introduced a Planet-Scale AR Alliance with telecom operators from various countries: Deutsche Telekom from Germany, Orange from France, SK Telecom from South Korea, Telus from Canada, Softbank from Japan, and Verizon from the US. In addition, Niantic has filed a patent, US20200269132A1, related to Mobile Edge Computing.

Insight – The launch of the multiplayer game Codename: Urban Legends and the introduction of the AR Alliance, which includes top telecom providers from different parts of the world, suggest that Niantic may be planning to launch 5G-enabled games in different jurisdictions using the 5G services of these operators.

This strategy suggests that collaboration with telecom companies, especially for their 5G services, can play an important role in making an AR Cloud-related game successful.

YOUAR

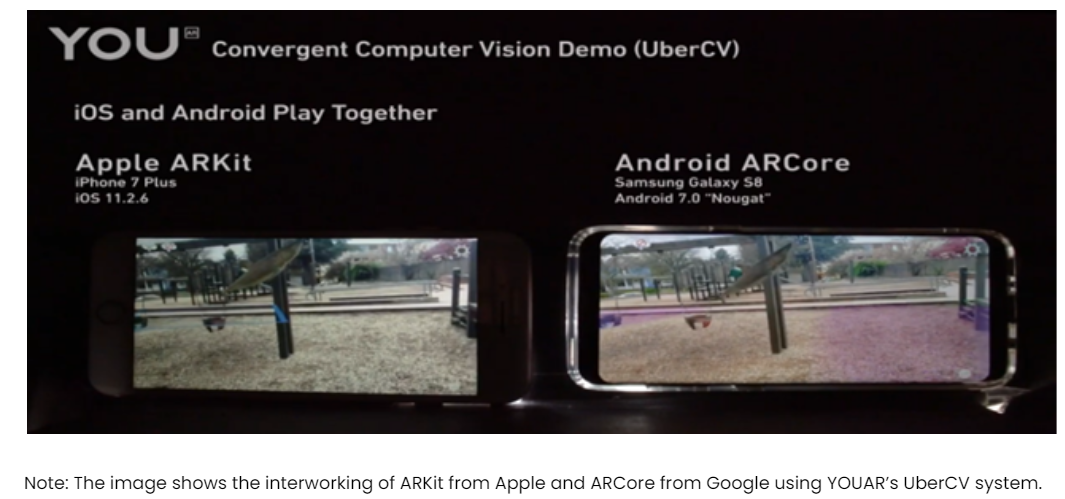

YouAR, spun off from Bent Image Lab and founded in 2015, provides the tools to make Augmented Reality an ongoing social experience, unique to each user’s context.

Cross-Platform Computer Vision System

YouAR, of Portland, OR, is coming out of stealth with a product called UberCV that addresses some of the most vexing problems in AR: convergent cross-platform computer vision (real-time interaction between ARKit and ARCore devices), interactivity of multiple AR apps in the same location across devices, real-time scene mapping, geometric occlusion of digital objects, localization of devices beyond GPS (the AR Cloud), and the bundle drop of digital assets into remote locations.

UberCV solves the temporary-persistence problem of ARCore and ARKit and provides seamless, persistent augmented reality experiences across both frameworks. Users can add new content in person or remotely, and when they open the app, everything is right where they left it. It uses computer vision to enable AR Cloud localization, so that apps can display content in the same position for multiple users. The technology makes it possible to create, discover, and associate content with AR “Anchors” anywhere in the world. YouAR also holds a patent (US10600249B2) behind this technology, which discloses an augmented reality (AR) platform that supports the concurrent operation of multiple AR applications developed by third-party developers.

Conclusion

In the last two decades, many innovations such as AR, VR, and IoT have emerged, and as a result the world looks vastly different from what we could have imagined just a decade ago.

While still in its early stages, the emergent metaverse provides an opportunity to connect the digital world with the real one and also helps users to connect multi-dimensionally with one another.

However, companies will have to overcome hurdles such as SLAM before the metaverse can gain worldwide acceptance. As seen in this article, a number of big companies are innovating in this area and have come up with different solutions to the problems of SLAM.

A lot of questions running through your head? As our researchers dived deeper into the world of the metaverse, the information they gathered was too much for a single article. Reach out to us with your questions, e.g. “Which are the top start-ups innovating in this domain?”, and we will provide you with our insights.

Authored by: Akshay Agarwal (Senior Analyst) with the assistance of Nikhil Gupta (Group manager, Search team)